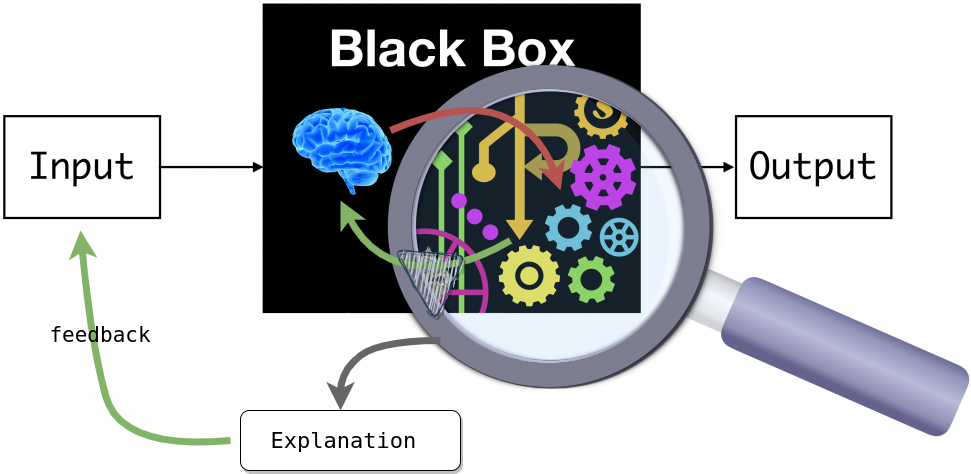

I am excited about research that advances biomedicine using modern statistical techniques. I am particularly interested in developing interpretable AI models, leveraging ideas of causation in deep learning, and building neuroscience-inspired models of perception.

I have previously submitted my work to the Frontiers, MedIA, and TMI journals, as well as to the MICCAI, ICONIP, and AAAI conferences.

Some of my ongoing projects include:

- Symbolic interpretability of deep learning models (GitHub)

- Ante-hoc interpretability: an adaptive regularization technique for directed learning (GitHub)

Apart from these, I’m also interested in causal learning methods that try to incorporate elements of causation into deep network training (interesting paper). Please check out my previous projects here. Feel free to get in touch if you would like to collaborate or discuss anything.