Disclaimer: All the points mentioned here reflect my own personal views.

There are plenty of wild statements about artificial intelligence doing the rounds, ranging from a threat to our jobs to a threat to the human race as we know it. Do these statements have any statistical significance? Do they have any experimental basis? Let us try to understand.

So what is artificial intelligence? In the most fundamental sense, AI is all about algorithms enabled by constraints, exposed by representations that support models targeted at thinking, perception, and action. The goal of artificial intelligence is to enhance the ability of computers to think and analyze data as humans do. The tasks involved can be object detection, speech processing, language translation, and sometimes even imagination. Yes, you read that right: imagination too. Broadly speaking, anything can be considered artificial intelligence if it involves a program doing a task that we would normally think relies on human intelligence. Exactly how this feat is achieved is not the point; the mere fact that it can be done is a sign of artificial intelligence.

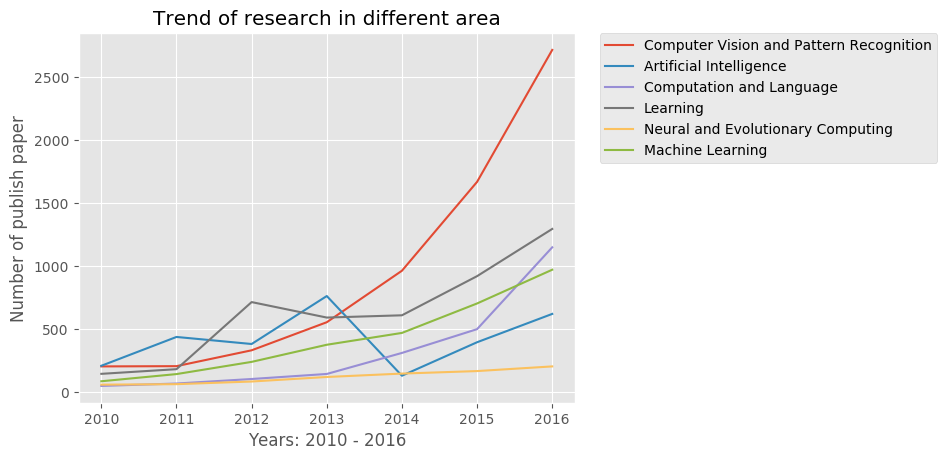

Andrew Ng once said, “If a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future.” Research in this domain is moving at an accelerating pace.

There have been remarkable advances in AI. Today we can tell a voice-activated personal assistant such as Google Assistant to “Set an appointment” or rely on Facebook to tag our photographs. Google Translate is often almost as accurate as a human translator, EA Sports uses AI to model its realistic-looking characters, and even radiologists now rely on AI to help with diagnosis. Billions of dollars in research funding and venture capital have flowed toward AI, and it is one of the most sought-after courses in any engineering program. Researchers in fields such as CS, CFD, and astronomy are using various AI tools to advance their research.

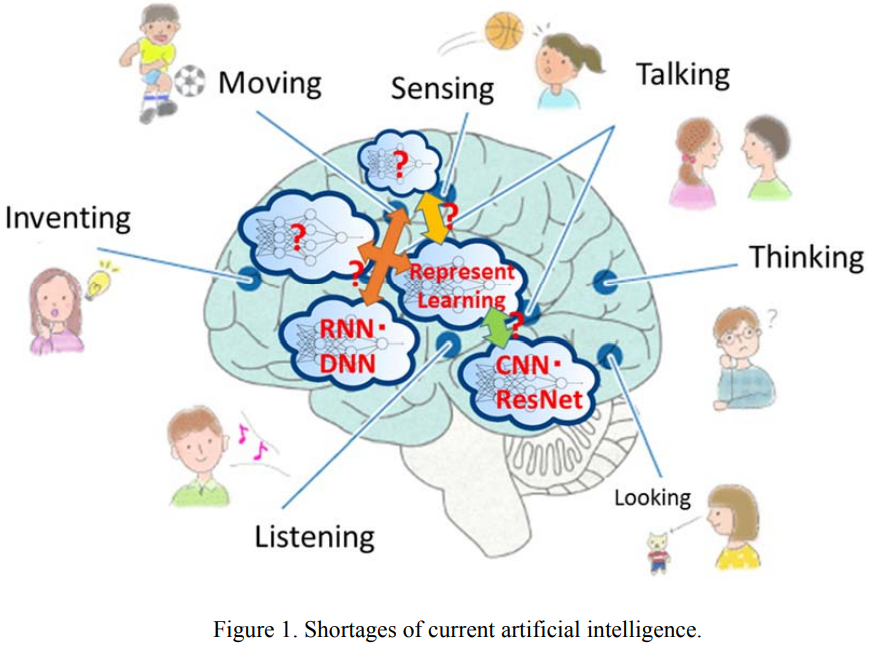

However, current AI engines are nowhere near achieving the capabilities of a human brain. As seen in the figure above, AI can’t handle many things that the human brain performs very easily. Understanding the intricacies and nuances of human language, and multitasking, are still beyond the reach of AI (though we are slowly advancing in that direction).

Building a Brain

Researchers and engineers have taken this challenge head-on, and there have been many competing approaches. One of the earliest was the rule-based approach, in which computers were fed information and rules about the world. This required programmers to laboriously write software that knew about attributes such as edges, corners, etc. The approach took a huge amount of time, yet it left systems incapable of dealing with ambiguous data, so it is limited to very narrow and controlled applications. Most recent methods for building an intelligent machine instead feed enormous amounts of data to a network (computer) and expect it to learn from that data. This kind of rule-free approach has taken AI research to a whole new level. Its basic building block is a neuron (the sigmoidal neuron), which shares quite a few properties with the neurons in the human brain but still falls short in many other respects.
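As a rough illustration of the sigmoidal neuron mentioned above, here is a minimal Python sketch. The function names `sigmoid` and `neuron`, and the input, weight, and bias values, are my own assumptions chosen only for illustration; real networks stack many such units and learn the weights from data rather than fixing them by hand.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


# Hypothetical values: 2.0 * 0.5 + 1.0 * (-1.0) + 0.0 = 0.0
output = neuron(inputs=[0.5, -1.0], weights=[2.0, 1.0], bias=0.0)
print(output)  # sigmoid(0.0) -> 0.5
```

Unlike a hand-written rule, nothing in this unit knows what an edge or a corner is. Whatever it ends up detecting is determined entirely by the weights it is given or learns.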

However, this rule-free approach has many dissimilarities and disadvantages when compared with the human brain, some of which are:

- It needs enormous amounts of data to train a model, just to reach state-of-the-art performance on a single task.

- It is highly dependent on the viewpoint of observation. Consider the problem of object detection: current AI often fails to find similarities between images of the same object taken from the front and from the side.

- It is far less power-efficient. The human brain runs on remarkably little power, whereas an AI engine needs multiple GPUs and servers to match the speed of the human brain, and even then for only a single task.

- Associative memory linkages in the human brain have still not been modeled using deep learning (DL) tools.

If we dive deeper still into the mathematics and neuroscience, we find many more interesting differences:

- Once trained, deep learning models behave deterministically, which is not the case in the human brain. It is said that every activity in the human brain can be modeled using oscillatory models of the neuron, which means that temporal information is exploited to a large extent in the brain but not in deep learning models. These models should somehow include probabilistic temporal features in their predictions.

- Is Bayesian learning the future? Bayesian learning is a very powerful tool for modeling probability distributions directly from data. It is not very parameter-intensive, but it is computationally very expensive. Seen that way, Bayesian models should make more sense. There is a great deal of research on combining the ideas of Bayesian learning with deep learning.

- Another approach is to change the structure of the basic neuron from a sigmoidal or spike-triggered form to a more robust and complex form based on an oscillatory model, which includes temporal information.

- The human brain deals with signals that have a certain phase and amplitude, i.e., brain signals exist in the complex domain, whereas current state-of-the-art deep learning models operate in the real domain. Is this a problem? Well, if evolution has selected a way to map signals in the complex domain, it is presumably highly efficient. The main difficulty lies in executing models built in the complex domain, because current GPUs are built around real-number arithmetic. To give a small flavor: updating a complex weight changes both its amplitude and its phase, which is not the case for a real weight. The optimization strategy in the complex domain is entirely different from real-domain optimization.

- The EI neuronal model of the human brain needs to be explored in a much more mathematical sense, and these ideas should be applied to deep learning models.

- In the human brain, the number of layers is very limited but the connectivity between layers is enormously high. In DL models, the number of layers is huge and the connectivity between layers is low. This clearly indicates room for improvement in current models. Learning from very limited data points, or learning viewpoint-independent features, might become possible with this kind of connectivity/architecture.
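To give a feel for the point about complex weights in the list above, here is a small Python sketch using only the standard library. The starting weights and the update steps are hypothetical numbers picked for illustration, and the single additive step is a stand-in for whatever optimizer would actually be used, not a real complex-domain training rule.

```python
import cmath
import math

# Hypothetical complex weight with amplitude 1 and phase 0.
w = complex(1.0, 0.0)
# Hypothetical update step along the imaginary axis.
step = complex(0.0, 1.0)
w_new = w + step

# cmath.polar returns (amplitude, phase in radians).
amp_before, phase_before = cmath.polar(w)
amp_after, phase_after = cmath.polar(w_new)

print(amp_before, phase_before)  # amplitude 1.0, phase 0.0
print(amp_after, phase_after)    # amplitude sqrt(2), phase pi/4

# A real weight has no continuous phase: an update only moves it
# along the number line, so at most its size and sign can change.
w_real = 1.0
w_real_new = w_real + 1.0
print(w_real_new)                # 2.0
```

A single update to the complex weight rotates it as well as rescaling it, so an optimizer working in the complex domain has to account for two coupled quantities per weight instead of one.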

Conclusion

All the tasks that Google Assistant, Facebook, Alexa, or Siri perform are really interesting and can undoubtedly be regarded as huge leaps in the field of artificial intelligence. Still, many core questions in AI remain completely unsolved, and even largely unasked. We need to put those questions forward and try to answer them, taking inspiration from nature (the brain) and drawing inferences from it, because there could be an incredible number of tasks that ‘intelligent machines’ would do much better than us, with incredible precision, accuracy, and speed.

Feel free to add comments and also share your thoughts… Thank you for your time.
